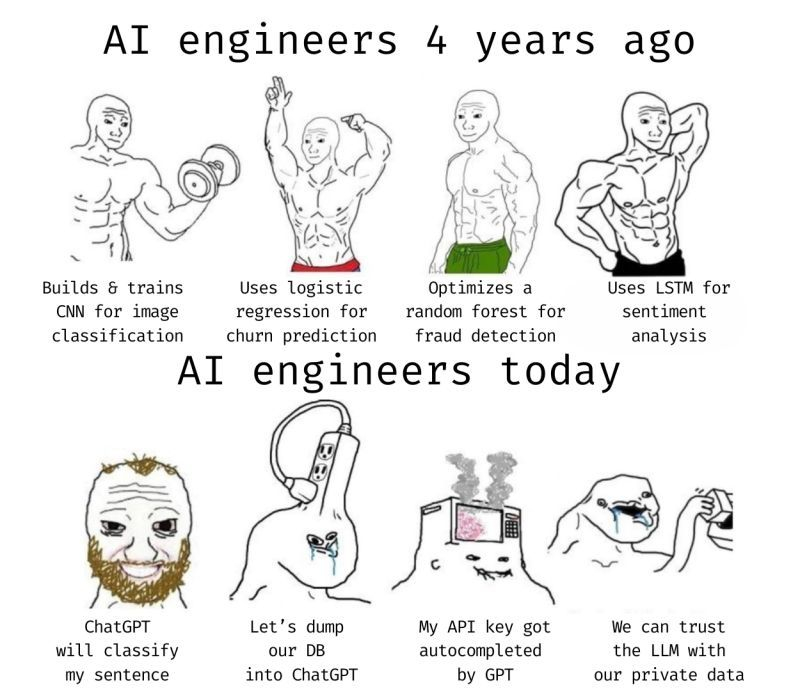

𝗔𝗜 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝘀 𝗧𝗵𝗲𝗻 𝘃𝘀. 𝗡𝗼𝘄: 𝗪𝗵𝗮𝘁 𝗖𝗵𝗮𝗻𝗴𝗲𝗱 𝗮𝗻𝗱 𝗪𝗵𝘆 𝗜𝘁 𝗠𝗮𝘁𝘁𝗲𝗿𝘀

Just a few years ago, AI engineers were deep into building models from scratch:

• Training 𝗖𝗡𝗡𝘀 for image classification

• Using 𝗹𝗼𝗴𝗶𝘀𝘁𝗶𝗰 𝗿𝗲𝗴𝗿𝗲𝘀𝘀𝗶𝗼𝗻 for churn prediction

• Optimizing 𝗿𝗮𝗻𝗱𝗼𝗺 𝗳𝗼𝗿𝗲𝘀𝘁𝘀 for fraud detection

• Implementing 𝗟𝗦𝗧𝗠𝘀 for sentiment analysis

These tasks required deep mathematical knowledge, coding expertise, and hands-on experience with data pipelines.
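For a sense of what "building from scratch" involved, here is a minimal sketch of logistic regression for churn prediction, trained with batch gradient descent in plain Python. The two features and the six toy customers are made up for illustration, and the learning rate and epoch count are arbitrary choices; a real project would typically use a library such as scikit-learn and a proper data pipeline.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function: maps a score to a probability."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(w, b, x):
    """Probability of churn for one feature vector x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def train_logistic_regression(X, y, lr=0.1, epochs=5000):
    """Fit weights w and bias b by batch gradient descent on the mean log-loss."""
    m, n = len(X), len(X[0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # d(log-loss)/d(score) = p - y
            for j in range(n):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the gradient averaged over all m examples
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b

# Hypothetical toy data: [support tickets last month, tenure in years / 10]
X = [[0.0, 1.0], [1.0, 0.8], [0.5, 0.9], [3.0, 0.2], [4.0, 0.1], [3.5, 0.3]]
y = [0, 0, 0, 1, 1, 1]  # 1 = customer churned

w, b = train_logistic_regression(X, y)
for xi, yi in zip(X, y):
    print(xi, "churned" if yi else "stayed", round(predict_proba(w, b, xi), 3))
```

Every piece of this (the loss gradient, the update rule, when to stop) had to be chosen, implemented and debugged by hand, which is the kind of work the examples above describe.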

𝗙𝗮𝘀𝘁 𝗳𝗼𝗿𝘄𝗮𝗿𝗱 𝘁𝗼 𝘁𝗼𝗱𝗮𝘆:

Much of that complexity is now abstracted away by 𝗟𝗮𝗿𝗴𝗲 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹𝘀 (𝗟𝗟𝗠𝘀), such as the GPT models behind ChatGPT, and by other large pre-trained models. Instead of building and training models from scratch, many AI tasks now come down to calling an API or fine-tuning a pre-trained model.

This shift has sparked debate:

• Some argue AI engineering has become “too easy.”

• Others see it as 𝗱𝗲𝗺𝗼𝗰𝗿𝗮𝘁𝗶𝘇𝗮𝘁𝗶𝗼𝗻—making AI accessible to far more people.

𝗪𝗵𝗮𝘁 𝘁𝗵𝗶𝘀 𝗺𝗲𝗮𝗻𝘀 𝗳𝗼𝗿 𝗽𝗿𝗼𝗳𝗲𝘀𝘀𝗶𝗼𝗻𝗮𝗹𝘀 (𝗯𝗲𝗴𝗶𝗻𝗻𝗲𝗿𝘀 → 𝗲𝘅𝗽𝗲𝗿𝘁𝘀):

𝟭 𝗕𝗲𝗴𝗶𝗻𝗻𝗲𝗿𝘀: You can start experimenting with powerful models without a PhD in ML. Focus on prompt engineering, data handling, and ethical use.

𝟮 𝗜𝗻𝘁𝗲𝗿𝗺𝗲𝗱𝗶𝗮𝘁𝗲 𝗽𝗿𝗮𝗰𝘁𝗶𝘁𝗶𝗼𝗻𝗲𝗿𝘀: Learn how to integrate LLMs into real systems (APIs, apps, automation). The value lies in application, not just model building.

𝟯 𝗔𝗱𝘃𝗮𝗻𝗰𝗲𝗱 𝗽𝗿𝗼𝗳𝗲𝘀𝘀𝗶𝗼𝗻𝗮𝗹𝘀: Shift towards scalability, optimization, and governance—how to make LLMs safe, efficient, and business-ready.
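To make "integrating LLMs into real systems" a little more concrete, here is a minimal sketch of the glue code that often surrounds a model call: a prompt template, a call through an injected client, validation of the structured reply, and a retry with a safe fallback. The `LLMClient` type, the prompt wording and the fake client are hypothetical stand-ins, not any vendor's SDK; in practice you would wrap your provider's official client behind the same function signature.

```python
import json
from typing import Callable, Iterable

# Hypothetical client type: any function that takes a prompt string and
# returns the model's raw text reply. Wrap your provider's real SDK in it.
LLMClient = Callable[[str], str]

ALLOWED_LABELS = {"positive", "negative", "neutral"}

PROMPT_TEMPLATE = (
    "Classify the sentiment of the customer message below as positive, "
    "negative or neutral. Reply only with JSON like "
    '{{"sentiment": "positive"}}.\n\nMessage: {message}'
)

def classify_sentiment(message: str, client: LLMClient, max_attempts: int = 3) -> str:
    """Ask the model for a label, validate the reply, retry, then fall back."""
    prompt = PROMPT_TEMPLATE.format(message=message)
    for _ in range(max_attempts):
        raw = client(prompt)
        try:
            reply = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not JSON at all: ask again
        label = reply.get("sentiment") if isinstance(reply, dict) else None
        if isinstance(label, str) and label in ALLOWED_LABELS:
            return label
    return "neutral"  # conservative default if the model never complies

def make_fake_client(replies: Iterable[str]) -> LLMClient:
    """Test double that returns canned replies in order, ignoring the prompt."""
    it = iter(replies)
    return lambda prompt: next(it)

# First reply is free text (rejected), second is valid JSON.
client = make_fake_client(["Sure! It's positive.", '{"sentiment": "positive"}'])
print(classify_sentiment("Love the new dashboard!", client))
```

Injecting the client as a plain function keeps the validation and retry logic testable without network access; the model call itself is one line, and most of the engineering sits around it.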

𝗧𝗵𝗲 𝗯𝗮𝗹𝗮𝗻𝗰𝗲 𝗵𝗮𝘀 𝗰𝗵𝗮𝗻𝗴𝗲𝗱:

• Before → Build models

• Now → Apply, adapt, and govern models

The core skill today isn’t just “training models”—it’s 𝘂𝗻𝗱𝗲𝗿𝘀𝘁𝗮𝗻𝗱𝗶𝗻𝗴 𝗽𝗿𝗼𝗯𝗹𝗲𝗺𝘀, 𝗱𝗮𝘁𝗮, 𝗮𝗻𝗱 𝗵𝗼𝘄 𝘁𝗼 𝗿𝗲𝘀𝗽𝗼𝗻𝘀𝗶𝗯𝗹𝘆 𝗹𝗲𝘃𝗲𝗿𝗮𝗴𝗲 𝗽𝗼𝘄𝗲𝗿𝗳𝘂𝗹 𝗔𝗜 𝘁𝗼𝗼𝗹𝘀 𝗮𝘁 𝘀𝗰𝗮𝗹𝗲.

Whether you’re just starting or already working in the field, the key takeaway is 𝗔𝗜 𝗶𝘀 𝗺𝗼𝘃𝗶𝗻𝗴 𝗳𝗿𝗼𝗺 𝗺𝗼𝗱𝗲𝗹-𝗰𝗲𝗻𝘁𝗿𝗶𝗰 𝘁𝗼 𝗮𝗽𝗽𝗹𝗶𝗰𝗮𝘁𝗶𝗼𝗻-𝗰𝗲𝗻𝘁𝗿𝗶𝗰. The winners will be those who can bridge technology with real-world impact.

𝗕𝗼𝗻𝘂𝘀 𝗧𝗶𝗽: If you're looking to level up in your AI career, explore the 𝗔𝗜 & 𝗗𝗮𝘁𝗮 𝗦𝗰𝗶𝗲𝗻𝗰𝗲 𝗖𝗼𝘂𝗿𝘀𝗲 𝘄𝗶𝘁𝗵 𝗖𝗲𝗿𝘁𝗶𝗳𝗶𝗰𝗮𝘁𝗶𝗼𝗻 from 𝗧𝗲𝗰𝗵𝗩𝗶𝗱𝘃𝗮𝗻 to stay ahead of industry trends.

